Uncovering user insights with machine learning

For product people, uncovering user insights is a critical part of the job, and as someone who came to product from engineering, I’ve always wanted to use machine learning to do the analysis for us. I already use a few off-the-shelf tools in my day-to-day work. One I’ve used extensively is Olvy, which does a good job of summarising insights and showing sentiment over time.

But the engineering half of my brain always wanted to do this myself, just for the sake of understanding how stuff works and keeping my engineering claws sharp. This led me down a path of dabbling around with some NLP models on Google Colab.

The challenge: Making sense of user feedback at scale

For any Product Manager, making sense of user feedback at scale is a challenge, especially when you have hundreds of thousands of downloads and the reviews keep pouring in. Generally, product teams need to understand:

- What features are users loving or hating?

- Which bugs or issues are reported most frequently?

- How does sentiment differ between platforms?

- Are there hidden patterns in the feedback that we are missing?

Collecting the data

The first step was gathering all the reviews in one place. For this exercise, I decided to pull data for a mobile app from Reddit, Google Play and the App Store, using existing Python libraries for each source:

- App Store: Used the app-store-scraper package

- Google Play: Leveraged the google-play-scraper library

- Reddit: Collected mentions with the praw (Python Reddit API Wrapper)

A simple script like this did the trick for Google Play:

```python
from google_play_scraper import Sort, reviews

# Fetch up to 1,000 of the newest reviews from the US store, in English
result, continuation_token = reviews(
    'com.yourapp.name',
    lang='en',
    country='us',
    sort=Sort.NEWEST,
    count=1000
)
```
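The call above returns at most `count` reviews plus a `continuation_token`, which `reviews()` also accepts as a keyword argument to resume where the previous call stopped. A minimal paging loop, sketched against a hypothetical `fetch_page(token)` callable so the logic can be exercised without network access; the commented usage reflects my understanding of the library, so check it against your installed version:

```python
from typing import Any, Callable, List, Optional, Tuple

# Hypothetical callable shape: fetch_page(token) -> (page_of_reviews, next_token)
FetchPage = Callable[[Optional[Any]], Tuple[List[dict], Optional[Any]]]


def fetch_all(fetch_page: FetchPage, max_items: int) -> List[dict]:
    """Request pages until max_items is reached, a page is empty, or no token is returned."""
    items: List[dict] = []
    token: Optional[Any] = None
    while len(items) < max_items:
        page, token = fetch_page(token)
        if not page:
            break  # the source has nothing more to give
        items.extend(page)
        if token is None:
            break  # no way to request a further page
    return items[:max_items]


# Possible usage with google_play_scraper (needs network access, so left commented out):
# from google_play_scraper import Sort, reviews
# all_play_reviews = fetch_all(
#     lambda tok: reviews('com.yourapp.name', lang='en', country='us',
#                         sort=Sort.NEWEST, count=200, continuation_token=tok),
#     max_items=5000,
# )
```

Injecting the fetch function keeps the stopping rules (empty page, missing token, item cap) testable on their own.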

After collecting data from all sources, I combined them into a single dataset with columns for the review text, source, and any ratings information.
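A minimal sketch of that combining step with pandas. The field names are assumptions based on what I understand the libraries return (`content`/`score` from google-play-scraper, `review`/`rating` from app-store-scraper); Reddit posts are taken as plain strings you have already assembled, since they carry no star rating:

```python
import pandas as pd


def combine_reviews(play_reviews, app_store_reviews, reddit_posts):
    """Normalise three sources into one DataFrame with text, source and rating columns.

    Assumed input shapes (check against your library versions):
      play_reviews: dicts with 'content' and 'score' keys
      app_store_reviews: dicts with 'review' and 'rating' keys
      reddit_posts: plain strings, e.g. a submission's title plus body
    """
    rows = []
    for r in play_reviews:
        rows.append({'text': r.get('content'), 'source': 'Google Play', 'rating': r.get('score')})
    for r in app_store_reviews:
        rows.append({'text': r.get('review'), 'source': 'App Store', 'rating': r.get('rating')})
    for text in reddit_posts:
        rows.append({'text': text, 'source': 'Reddit', 'rating': None})
    df = pd.DataFrame(rows, columns=['text', 'source', 'rating'])
    # Drop rows with missing or blank text so every remaining row can be classified
    df = df[df['text'].fillna('').str.strip() != ''].reset_index(drop=True)
    return df
```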

The ML exploration: Zero-shot magic

I’m not a machine learning engineer, but I was determined to find an accessible approach. After some research, I decided to go with zero-shot classification, which requires no labeled training data. This was perfect, since I didn’t have time to manually label thousands of reviews.
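As I understand the Hugging Face implementation, the zero-shot pipeline turns each category into an NLI hypothesis (by default `"This example is {}."`) and asks an NLI model such as `facebook/bart-large-mnli` whether the review entails it. The `multi_label` flag changes how those logits become scores. A small stdlib sketch of the two modes, using made-up logits rather than real model output:

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_scores(logits, multi_label):
    """logits maps label -> (contradiction_logit, entailment_logit) from an NLI model."""
    labels = list(logits)
    if multi_label:
        # Each label judged on its own: softmax over (contradiction, entailment)
        scores = [softmax(list(logits[label]))[1] for label in labels]
    else:
        # Labels compete: softmax over the entailment logits of all labels
        scores = softmax([logits[label][1] for label in labels])
    # Mirror the pipeline's output shape: labels sorted by descending score
    ranked = sorted(zip(labels, scores), key=lambda p: p[1], reverse=True)
    return {'labels': [lab for lab, _ in ranked], 'scores': [s for _, s in ranked]}


# Illustrative logits only, not output from a real model
fake = {'Bug/Issue': (-2.0, 3.0), 'Performance': (-1.0, 2.0), 'Pricing': (2.5, -2.0)}
print(zero_shot_scores(fake, multi_label=True))
print(zero_shot_scores(fake, multi_label=False))
```

In multi-label mode the scores are independent and need not sum to 1, so one review can score highly on several categories at once.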

I put together a Python notebook in Google Colab and started experimenting. Here’s the core part that performs the classification:

```python
from transformers import pipeline

# Define common app review categories
CATEGORIES = [
    'Bug/Issue',
    'Feature Request',
    'UI/UX',
    'Performance',
    'Pricing',
    'Customer Support',
    'Positive Feedback',
    'Negative Feedback'
]

# Load zero-shot classifier
classifier = pipeline("zero-shot-classification",
                      model="facebook/bart-large-mnli")

# Classify a review; multi_label=True scores each category independently
result = classifier(
    "The app keeps crashing when I try to upload photos",
    CATEGORIES,
    multi_label=True
)

# View results (labels come back sorted by descending score)
print(f"Top category: {result['labels'][0]}")
print(f"Confidence: {result['scores'][0]:.2f}")
```

I had no idea how this would turn out, but given that these are fairly simple reviews, I figured I would get something good enough for an experiment. To my surprise, it worked remarkably well: the model correctly identified bug reports, feature requests, and UI complaints with impressive accuracy. One of these days I will try a model fine-tuned more specifically for this task.

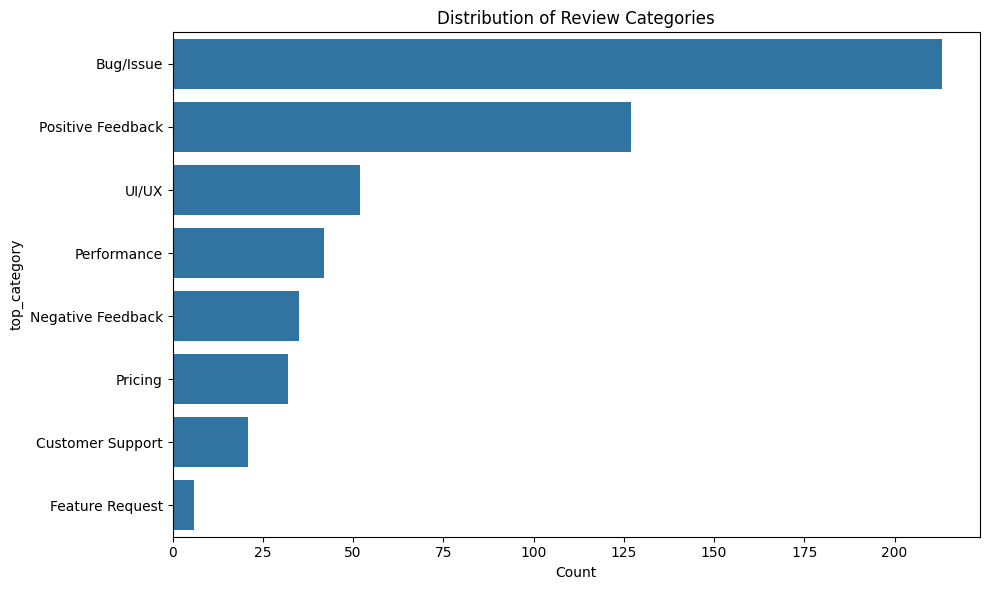

Categories identified by zero-shot classifier
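Because `multi_label=True` scores every category independently, reading only `labels[0]` can hide a second relevant category. A minimal sketch that keeps every label above a cut-off; the 0.5 threshold and the `'Uncategorized'` fallback are my own assumptions and worth tuning on a hand-checked sample:

```python
def labels_above(result, threshold=0.5, fallback='Uncategorized'):
    """Return (top_category, all_matching_labels) from a zero-shot pipeline result.

    `result` has the pipeline's shape: {'sequence': ..., 'labels': [...], 'scores': [...]},
    with labels already sorted by descending score.
    """
    matches = [label for label, score in zip(result['labels'], result['scores'])
               if score >= threshold]
    top = matches[0] if matches else fallback
    return top, matches


example = {
    'sequence': 'The app keeps crashing when I try to upload photos',
    'labels': ['Bug/Issue', 'Performance', 'Negative Feedback', 'Pricing'],
    'scores': [0.97, 0.62, 0.55, 0.02],  # illustrative numbers, not real model output
}
print(labels_above(example))
```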

Handling scale: Batch processing

My initial attempts hit a roadblock when processing our full dataset: the classifier would time out or run out of memory. I learned to process reviews in smaller batches:

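```python
import math

# demo_texts: list of review texts to classify (e.g. the text column of the combined dataset)
batch_size = 10
num_batches = math.ceil(len(demo_texts) / batch_size)
results = []

for i in range(0, len(demo_texts), batch_size):
    batch_texts = demo_texts[i:i+batch_size]
    print(f"Processing batch {i//batch_size + 1}/{num_batches}...")

    batch_results = []
    for text in batch_texts:
        if isinstance(text, str) and text.strip():
            result = classifier(text, CATEGORIES, multi_label=True)
        else:
            result = None  # placeholder keeps results aligned with the input rows
        batch_results.append(result)

    results.extend(batch_results)
```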

This approach allowed me to process hundreds of reviews without hitting resource limits.
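The chart code later in this post reads `top_category` and `sentiment` columns from an `all_reviews` DataFrame, but the step that creates them isn’t shown. A minimal sketch of attaching the classifier output, assuming `results` holds one entry per row in the same order, with `None` for rows that were skipped; the `top_score` column and the `'Uncategorized'` fallback are my own additions:

```python
import pandas as pd


def add_top_category(df, results, fallback='Uncategorized'):
    """Attach top_category / top_score columns from zero-shot results.

    Assumes `results` has one entry per row of `df`, in the same order,
    with None for rows that were skipped (e.g. empty text).
    """
    if len(results) != len(df):
        raise ValueError('results must line up one-to-one with the DataFrame rows')
    out = df.copy()
    out['top_category'] = [r['labels'][0] if r else fallback for r in results]
    out['top_score'] = [r['scores'][0] if r else float('nan') for r in results]
    return out
```

Returning a copy leaves the original DataFrame untouched, which makes it easy to rerun classification with different categories.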

Adding sentiment analysis: The emotional layer

Categories were a great start, but I also wanted to understand the emotional tone of our reviews. Were users frustrated with bugs, or merely reporting them? Were feature requests enthusiastic or demanding?

Adding a sentiment analysis component was surprisingly simple:

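```python
from transformers import pipeline

# With no model specified, the pipeline falls back to a default English sentiment model
sentiment_analyzer = pipeline("sentiment-analysis")
sentiment_result = sentiment_analyzer("I love this app but wish it had dark mode")[0]
print(f"Sentiment: {sentiment_result['label']}, Score: {sentiment_result['score']:.2f}")
```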

With category classification and sentiment analysis combined, the toolkit was ready to give us a richer understanding of our user feedback.
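The default `sentiment-analysis` pipeline returns a binary `POSITIVE`/`NEGATIVE` label with a confidence score, which is awkward to average across categories or weeks. A minimal sketch that maps it to a signed value; the mapping and the `neutral_band` cut-off are assumptions of mine, not something the pipeline provides:

```python
def signed_sentiment(result, neutral_band=0.6):
    """Map a result like {'label': 'NEGATIVE', 'score': 0.98} to a value in [-1, 1].

    Predictions with confidence below neutral_band are treated as 0, a crude
    stand-in for mixed reviews that a binary model cannot express.
    """
    if result['score'] < neutral_band:
        return 0.0
    sign = 1.0 if result['label'].upper() == 'POSITIVE' else -1.0
    return sign * result['score']


print(signed_sentiment({'label': 'POSITIVE', 'score': 0.95}))
print(signed_sentiment({'label': 'NEGATIVE', 'score': 0.99}))
print(signed_sentiment({'label': 'POSITIVE', 'score': 0.55}))
```

A review like the dark-mode example above is exactly the kind of mixed feedback where a low-confidence band can be more honest than forcing a positive or negative label.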

The “Aha!” moments: Unexpected insights

As the visualization charts started appearing in my notebook, several insights immediately jumped out:

Platform differences: Google Play users reported more bugs, while App Store users made more feature requests.

Hidden pain points: Performance issues had the highest negative sentiment, even though they weren’t the most common complaint. This highlighted an area needing urgent attention.

Praise patterns: Users frequently praised the app’s UI but complained about loading times. This would help the product team prioritize performance optimizations while preserving the design users loved.
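Comparing raw counts across platforms can mislead when one store has far more reviews than another, so normalising within each source is safer. A minimal sketch, assuming the combined DataFrame has `source` and `top_category` columns; the toy rows are purely illustrative:

```python
import pandas as pd


def category_share_by_source(df):
    """Share of each top_category within each source (each row sums to 1)."""
    return pd.crosstab(df['source'], df['top_category'], normalize='index')


# Toy rows for illustration only; real input is the combined, classified review table
toy = pd.DataFrame({
    'source': ['Google Play', 'Google Play', 'Google Play', 'App Store', 'App Store'],
    'top_category': ['Bug/Issue', 'Bug/Issue', 'UI/UX', 'Feature Request', 'Bug/Issue'],
})
shares = category_share_by_source(toy)
print(shares.round(2))
```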

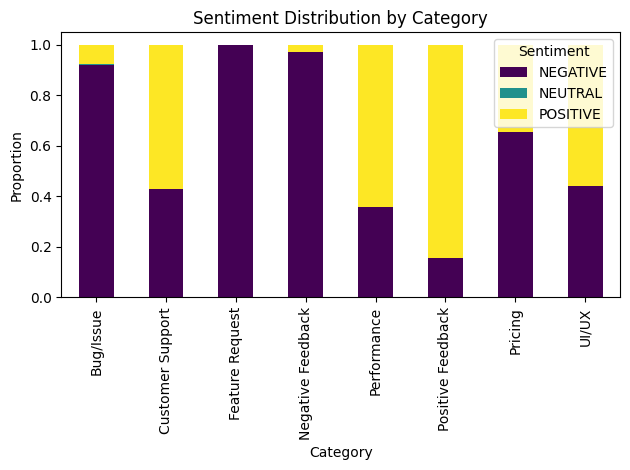

The visualization that stood out most was a stacked bar chart showing sentiment distribution across categories:

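```python
import matplotlib.pyplot as plt
import pandas as pd

# all_reviews: one row per review, with 'top_category' and 'sentiment' columns
category_sentiment = pd.crosstab(
    all_reviews['top_category'],
    all_reviews['sentiment'],
    normalize='index'  # each row sums to 1: share of each sentiment within a category
)

category_sentiment.plot(kind='bar', stacked=True, colormap='viridis')
plt.title('Sentiment Distribution by Category')
plt.ylabel('Share of reviews')
plt.tight_layout()
plt.show()
```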

This single chart made it easy to see which categories of feedback deserved immediate attention, based on their share of negative reviews.

Sentiment distribution by category
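A stacked chart hides volume, which matters for spotting pain points that are rare but intensely negative. A minimal sketch of a companion table, assuming `sentiment` holds the default pipeline’s `POSITIVE`/`NEGATIVE` labels; the toy rows are illustrative, not results:

```python
import pandas as pd


def pain_point_table(df, negative_label='NEGATIVE'):
    """Per-category review count and share of negative reviews, worst first."""
    table = df.groupby('top_category').agg(
        reviews=('sentiment', 'count'),
        negative_share=('sentiment', lambda s: (s == negative_label).mean()),
    )
    return table.sort_values('negative_share', ascending=False)


# Toy rows for illustration only
toy = pd.DataFrame({
    'top_category': ['Bug/Issue'] * 4 + ['Performance'] * 2,
    'sentiment': ['NEGATIVE', 'POSITIVE', 'NEGATIVE', 'POSITIVE', 'NEGATIVE', 'NEGATIVE'],
})
print(pain_point_table(toy))
```

Here the smaller category ranks first because every one of its reviews is negative, which is the pattern described under "Hidden pain points".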

Practical impact: From data to decisions

Armed with this analysis, a product team should be able to make more data-driven decisions, for example:

- Prioritize fixing performance issues over adding new features

- Identify that our pricing complaints were mostly coming from a specific user segment

- Discover a pattern of UI confusion in a recently updated feature

Lessons learned: Tips for Product Managers

If you’re considering a similar approach, here are some lessons from my experience:

Start small: Process a sample of reviews first to validate your approach before scaling up.

Choose categories carefully: I experimented with different category sets before finding the right granularity.

Consider context: Some reviews need human interpretation (sarcasm, mixed feedback) – use ML as a tool, not a replacement for reading important reviews.

Track over time: The real value comes from tracking how sentiment and categories change after product updates.

Share insights broadly: These visualizations are powerful tools when communicating with executives and engineering teams.
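For the "Track over time" tip, a minimal sketch of a weekly trend table. It assumes the combined DataFrame also keeps a review date in a `date` column, which is my assumption since the post doesn’t say which columns were kept; the toy rows are illustrative:

```python
import pandas as pd


def weekly_negative_share(df, date_col='date', negative_label='NEGATIVE'):
    """Weekly share of negative reviews per category; columns are categories."""
    data = df.assign(
        is_negative=(df['sentiment'] == negative_label).astype(float),
        # Bucket each review into its week, labelled by the week's Monday
        week=pd.to_datetime(df[date_col]).dt.to_period('W').dt.start_time,
    )
    return data.pivot_table(index='week', columns='top_category',
                            values='is_negative', aggfunc='mean')


# Toy rows for illustration only
toy = pd.DataFrame({
    'date': ['2024-03-04', '2024-03-05', '2024-03-12'],
    'top_category': ['Performance'] * 3,
    'sentiment': ['NEGATIVE', 'POSITIVE', 'NEGATIVE'],
})
trend = weekly_negative_share(toy)
print(trend)
```

Plotting `trend` with release dates marked makes it easier to see whether an update moved a category’s negative share.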

The road ahead: Building on the foundation

This exploration took me a few hours of trial and error, but running it takes only a few minutes on Google Colab with a GPU runtime enabled. Making this part of a product development process should be straightforward. Ideally, product teams should:

- Integrate a similar process into product analytics to connect review sentiment with user behavior

- Set up alerts when certain categories of feedback spike

- Create an internal dashboard for all team members to access insights
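For the alerting idea, a minimal sketch of one possible spike rule: flag a category when its latest weekly count exceeds the mean plus `k` standard deviations of the preceding weeks. The rule, the `window` and `k` defaults, and the toy counts are all assumptions for illustration; a weekly count table could come from something like `pd.crosstab(week, all_reviews['top_category'])`:

```python
import pandas as pd


def spiking_categories(weekly_counts, window=4, k=2.0):
    """Flag categories whose latest weekly count exceeds mean + k * std
    of the preceding `window` weeks.

    weekly_counts: rows are weeks (oldest first), columns are categories.
    """
    history = weekly_counts.iloc[-(window + 1):-1]
    latest = weekly_counts.iloc[-1]
    threshold = history.mean() + k * history.std(ddof=0)
    return sorted(latest[latest > threshold].index.tolist())


# Toy counts for illustration only
weekly_counts = pd.DataFrame(
    {'Bug/Issue': [10, 12, 11, 9, 30], 'Pricing': [5, 6, 5, 6, 6]},
    index=pd.period_range('2024-03-04', periods=5, freq='W'),
)
print(spiking_categories(weekly_counts))
```

A fixed statistical rule like this is crude but transparent; it is easy to explain to the team why an alert fired.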

What started as a curious exploration into machine learning became, for me, an essential tool for understanding users at scale. The ability to quickly classify and analyze thousands of reviews can fundamentally change how teams prioritize their product roadmap.

The next time you’re drowning in user feedback, remember that modern ML tools are more accessible than ever. Sometimes, the most valuable product insights are hidden in plain sight – you just need the right tools to find them.